This is an opinion piece about the strategies of the two major opponents in the spatial computing device field. I am leaving aside the feud between Mark Zuckerberg and Tim Cook over their business models and views on user privacy, and will focus instead on hardware- and software-related concerns. I also have mixed feelings about both companies’ strategies. Despite this, I try to be as dispassionate as I can, and I hope to give an overview of the industry’s state and why it matters.

Meta is a social media software company that acquired Oculus, a tiny hardware firm with a great vision, back in 2014. In less than ten years, this division made an astonishing first move into a new hardware category, the affordable virtual reality headset, and managed to position itself as market and technology leader. Back then, the primary goal of the deal was for Facebook not to miss out on the next computing platform, so that it could define the way we communicate, work and play instead of leaving this in the hands of the two major players of the mobile phone era: Google and Apple. Today we can ask whether this goal, or parts of it, has been achieved. While those two were largely absent from the consumer headset market, Facebook could test and iterate on its head-worn devices without any noteworthy interference from competitors. Other hardware companies that wanted to enter this space, mainly HTC and Pico, largely copied every aspect of the software/hardware stack and have not yet delivered the same level of quality. The most notable outcome of this development is the Meta Quest, and it certainly is a fantastic device considering its price and capabilities.

Meta Quest Pro – Meta’s most advanced Mixed Reality device – Source: Meta

On the other hand, there is Apple, the world’s most successful computing company, which has been building hardware products for over 40 years. Over the last 10 to 20 years, Apple has closed down and streamlined every aspect of its products, iterated on existing ones and launched new ones, perfected the user experience of its wearables lineup, and broadened its ecosystem from hardware to accompanying platforms and even content (Apple Music, Apple TV+). But until now, it had neither delivered an XR product nor communicated anything about one. The only thing Apple lacks is the income stream of a social media or search engine ad machine. We can therefore say that the two companies’ strategies were diametrically opposed in terms of what they want to deliver to the customer.

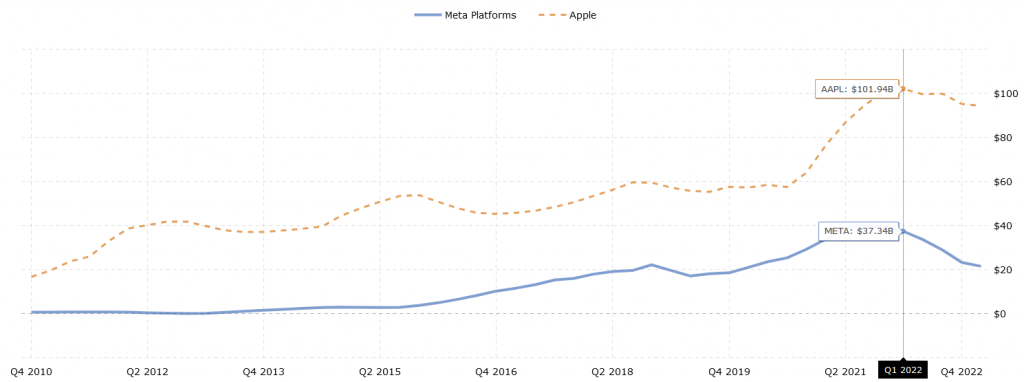

Sidenote: At the time of writing, Apple’s annual net income is around $100 billion with a valuation of $2.8 trillion, while Meta pulls in around $20 billion a year at a market cap of roughly $700 billion.

Source: MacroTrends LLC: https://www.macrotrends.net/stocks/stock-comparison?s=net-income&axis=single&comp=META:AAPL

With XR becoming the next computing platform, we should see the cards being reshuffled. But now that Apple is about to enter the market with an actual product that puts both companies in competition for the same kind of customer, I wonder whether Meta used its time and resources efficiently enough to really compete in Apple’s domain. Can Meta or any other tech giant ever break through this walled garden and deliver on the promised definition of the next platform?

So here is what I think Meta must address if it truly wants to be on par with the giant and achieve sustainable success with what it has in the pipeline:

1. Fixing marketing

Mark Zuckerberg argued last week that Meta’s vision looks completely different from what Apple has been showing, although the purpose and capabilities of the latest devices are roughly on the same level. Meta is betting heavily on the social aspect of shared virtual worlds and open ecosystems and aggressively promoting this as “the Metaverse”. The purpose of this product is not yet clear to the general public and is hardly understood by anyone, and I frankly cannot see much of a difference between wearing a Quest Pro and an Apple Vision Pro to enter the Metaverse. From a marketer’s perspective, Apple interestingly departed from its usual approach when communicating this product category. It knew it could not sell something whose purpose Joe Average does not yet understand, so it marketed a “face computer”, which is easier to grasp than the vague possibilities of a device that lets you enter an ominous “Metaverse”. Apple’s communication went from “1,000 songs in your pocket” to a relatively geeky product explanation. It did not position the product for the end consumer, but for developers, who still have to crack the code for experiences that make spatial computing indispensable and, eventually, even for the shared social XR spaces Meta envisions. Although placing multiple virtual screens around you is not the most compelling selling proposition, its benefit is much clearer than walking around and playing in a virtual environment as a cartoonish avatar. I argue that a “killer” experience cannot be marketed until headsets become glasses that can be worn outside the home for a full day.

2. Getting control over a development platform

In my opinion, Meta was largely disarmed when Apple released the M1 chip while continuously rolling out XR features, such as LiDAR-based photo scanning and room measurement apps, to millions of iOS users without shipping any XR glasses. Meta might have built an ecosystem and a great product, but it does not control the computing platform end to end. For the sake of fast market entry (and maybe an “open ecosystem”, which I find hard to believe), it built its product on top of Qualcomm’s Snapdragon chips and Google’s Android. If you build apps for Quest natively, you need to download the Android SDKs, which are maintained by Google. If not, you are probably using Unity or Unreal, which makes Meta reliant on providing APIs for those engines. Meta’s most relevant core patents are probably limited to computer vision and AI. Apple, by contrast, has built a completely closed platform where it not only controls the marketplace but also owns the software and hardware stacks, including an ecosystem for mobile app development. With Xcode, Swift and Reality Composer, Apple has complete control over its dev tools. The introduction of the M1 chip was the actual revolution: Apple had already been designing the A-series chips for its iPhones, but from then on it ran its own silicon across Macs and iPads as well, making it independent of third-party chip vendors. In the long run, Apple will probably have the competitive advantage, setting standards in user experience, deploying new features and bringing down hardware prices. Apple can ship its M-series chips in tens of millions of units across almost all of its products and tune their capabilities as it likes, while all other headset makers have to wait for Qualcomm to develop and refine its XR chip series based on feedback from devices two generations back.
This situation reminds me of the days when Microsoft Windows struggled with stability because it had to integrate hardware from many different vendors, while Apple relied on a few selected components, which contributed to the success of the MacBook and the iMac. History could repeat itself, only on a much larger scale.

3. Creating its own operating system

Apple’s newly introduced visionOS will be a tightly integrated symbiosis of software and hardware, just like on the iPhone and iPad, and it builds on years of improving mobile user experience and performance. We can expect great advances in UX and UI design for spatial computing input systems (the old term would be “human-computer interface”), optimized for performance, stability and usability. On the Vision Pro, everyday apps and productivity tools sit side by side. This is not yet possible on Android. Meta created its own OS as a derivative of Android, and for it to succeed, Meta might seek a tight collaboration with Google. We might see Google carry its Material Design standards over to a spatial computing OS someday, but it is unclear how committed Google is to doing that soon. Once Google enters the spatial domain, we will see whether Meta sticks with its own OS or opens it up to Google Play Services. Any such future also depends on a close, long-term collaboration between Android, Meta and Qualcomm. The quality of the user experience will always depend on the capabilities of the Android SDK and on how well Qualcomm’s chip design can handle sensor data alongside visual processing. From today’s perspective, Meta will always rely on them, as I haven’t heard of any Meta chip. The disadvantage is that high-level implementations of input systems are developed (for example by Unity) in collaboration with the hardware vendors or on top of OpenXR, so they will probably not advance as quickly in design, performance, stability or precision: every improvement has to pass through all those stacks and different hardware layouts in a kind of democratic process. We can see and feel this today in the varying quality of the different home environments in terms of input, tracking, UI/UX design, etc.
Apple, on the other hand, just flips some switches and can deploy and test new user experience standards with every visionOS update, while shipping new capabilities directly to developers via Xcode. To me, this sounds like a very autocratic approach. I am tempted to compare Apple with China, as the Apple Vision Pro offers no choice but visionOS.

4. Defining a complete content creation pipeline

Meta is a member of the Khronos Group, the consortium behind the open glTF standard. The standard is open and mainly relevant for real-time 3D content in web browsers and games; it could be considered the JPEG of 3D assets. There is a trend toward the browser becoming the engine for displaying spatial 3D content, thanks to glTF and WebGPU, which will hopefully be performant enough to matter. This is great for the potential adoption of spatial computing. But it will still be an intermediate stack that has to serve all kinds of hardware. Going this route probably means we are still far from high-quality experiences over the web, which still faces many limitations, primarily bandwidth. On the other hand, we need to consider how simple content creation pipelines must be for the spatial web to flourish. Today, it is still very hard to find user-friendly photo scanner apps on Android, or simple 3D scene generation tools that do not require a master’s degree in 3D design to get a usable result. If you want to close the user-generated content gap, you either have to hope that generative AI tools for spatial (3D) content emerge fast enough (such as the evolution of NeRFs and Gaussian Splatting), or you have to think further ahead. And here comes Apple. It quietly built another 3D file representation standard on top of USD, along with its own hardware-accelerated graphics and shader API, Metal, which is tightly integrated with its chip design. USD, created by Pixar, is a pipeline format for assembling complete 3D scenes in high-end productions. AAA content is handled through it anyway, and it is the foundation of NVIDIA Omniverse. Omniverse itself is a great vision of how the actual Metaverse might be technologically achieved and built. USDZ, a purpose-built package format of USD, can be used as an augmented reality 3D format, and Apple already integrates it at the OS level through Quick Look.
Apple also collaborates closely with OTOY on Octane, a physically based renderer that produces photorealistic environments on Metal. What we are seeing here is a fully designed pipeline standard, and I am not sure glTF will withstand the rise of USD as hardware performance improves. We can expect virtual worlds created for cinematic production to be easily adapted to the Vision Pro as hyperrealistic environments, small games or marketing apps for major entertainment brands. The result will presumably be much closer to what will drive a Metaverse than today’s fragmented social VR apps, built on a variety of open or proprietary standards. Meta does not yet have a seat in the Alliance for OpenUSD. In addition, non-technical content creators already have access to simple 3D asset generation on iOS devices thanks to their depth cameras (for example with Luma AI). The Vision Pro can even capture spatial video and photos. Wide adoption of spatial computing only makes sense if you offer an intuitive way to produce spatial content and easily deliver it to your audience. The success of generative AI content creation will probably also strongly influence how 3D experiences are created. This may be Meta’s chance to catch up where it lags behind.

5. Creating AAA content partnerships

The announcement that Disney would collaborate on content for the Vision Pro could be considered eye-opening, because Meta has not yet managed to land such a high-profile deal. In my opinion, Meta’s greatest mistakes were closing down its app store and its weak, at times non-existent, business strategy. The Oculus store tried to mimic Apple’s App Store and only let quality experiences in, while independent developers had a hard time delivering business-only experiences for Quest. Today, Meta’s store is primarily seen as a game store populated by small and medium-sized studios, and it lacks AAA content due to the low install base and limited monetization options. Second, I am not aware of a broad ecosystem of notable productivity apps on the Oculus store, apart from the Zoom and Microsoft partnerships, Gravity Sketch and ShapesXR. For original content, Meta has probably lost many developers to Pico, because Pico opened its system and hardware early on and offered good, affordable business support. On top of this, Meta has a reputation problem. Landing big content deals or securing exclusive titles will probably be much harder when you compete with Apple and Sony, especially when the biggest entertainment brands on earth belong to Disney. Plus, Apple has its own streaming service, which enables it to deliver unique or exclusive content. Beat Saber won’t save Meta forever. You might argue that most experiences will be interoperable thanks to Unity and its huge developer base, and will therefore be available on both systems. Probably yes, but take a look at today’s mobile landscape: many high-quality applications, for example for music composition, image and video editing or general content creation, were released on the Apple App Store first and came to the Google Play Store later, because of the vast number of devices developers had to support.

6. Showcasing a feasible avatar solution

This is probably tightly connected to the Metaverse disaster, but I feel like Meta has bet on the wrong priority. Having legs is simply not the decisive thing for interaction in virtual environments. It is the face. Apple showcased a simple way to scan your face to create an avatar and to use it on the most relevant remote communication platforms. Admittedly, some people who had the chance to test it say it suffers from the uncanny valley problem. But Apple has time to fix this. Meta’s avatar system will long be stigmatized by the unfortunate picture of a cartoonish Zuckerberg in front of the Eiffel Tower and the marketing for Horizon. Meta’s detailed face solution has yet to prove feasible. Meta may come up with something, but for now Apple has beaten it here.

Edit / Update in October after Meta Connect 23: Meta’s Codec Avatars demo really stood out and might put Meta at the forefront of creating photorealistic avatars beyond the uncanny valley.

7. Getting integrated in the daily life of a user

Even if some people might say they could not live without Instagram, social media is not a crucial or irreplaceable part of one’s daily routine. In fact, we are now seeing social media platforms come and go. If you want to be indispensable, anchor yourself in people’s lives through the things that matter: control payments, home appliances or the place where people search for information. Apple has a relevant payment solution. Apple Wallet is already connected to your Apple ID and therefore directly to the app ecosystem. It is the third most relevant payment solution on external websites, and you can buy your groceries with it in physical stores. Next, a spatial computing device used at home should arguably also let you control your home appliances to some degree. While Apple did not mention any concrete use cases that combine its solutions, we can assume it will achieve some sort of combination with the upcoming Siri. With Siri and the HomePod, there is at least a working solution that can probably be carried over easily to its spatial computer as an assistant for internet search and home control. Meta claims to be developing a similar AI-driven assistant, but there is no concrete product yet. The announcement of integrating GPT-style chatbots into WhatsApp and the rumors about integrating Llama 2 into Quest 3 might be steps toward a solution of its own, although I cannot see the big picture yet. In the worst case, Meta would rely on applications and developers that integrate the smart home SDKs and AI assistants of Amazon, Google or even Apple, because people simply already have them at home.

Edit / Update in October after Meta Connect 23: Meta’s route of creating a platform for AI assistants is exactly what I meant. This is Meta’s only real chance. Integrating the assistants into its apps and into its Ray-Ban smart glasses is simply genius. But Zuckerberg also made quite clear that Meta still has no clue what this might be good for, except “fun and entertainment”. Putting AI tech on the market unregulated like this is probably as crazy as Oppenheimer’s delivery to the military in 1945… I am not exaggerating here. AI’s impact on the daily lives of average users will transform our society. Hopefully we will see some form of regulation kick in.

8. Developing a geo-spatial solution

Monetizing the geo-located digital overlay of the future will rely on scanned data of the Earth, and it would certainly fit into Meta’s main business model. Google is undoubtedly number one here, and in my opinion the release of its Geospatial API marked one of the most important milestones of the spatial computing era. I have just learned that Apple Maps comes very close to Google Maps in terms of visual fidelity and data. Georeferenced data will be king once we talk about the AR cloud, persistently anchored experiences and the use of XR devices outside the home. Meta is certainly also collecting data and putting it on a map, but is there a concrete plan for developing and deploying a Visual Positioning System like Google’s? I am not aware of one; it would require point-cloud datasets of the world and a sophisticated cloud infrastructure. And what are the plans for Meta Spark? For now, Apple Maps is at least an application that can be optimized at the OS level and, to some degree, make Google Maps irrelevant on Apple devices. Considering that, Apple will certainly be able to take part in the advertising market for geo-spatial social experiences using its own data and applications, if it wants to. We will have to see whether Meta can pull off its own geo-spatial API or whether it will compete with or rely on Google, Snap or Niantic, who already tap into this with apps at their customers’ fingertips. Meta may be able to crank up the capabilities of Meta Spark, but brief research showed me it is far behind Snap’s Lens Studio. If we ever see a geo-spatial Instagram, I might see a chance for Meta to sustain its most important income streams.

9. Dealing with spatial audio on a more serious level

Apple has already laid the foundation for spatial audio experiences without the need for glasses. It promoted a 3D audio format through Apple Music and collected real-world data on audio usage with its technology-packed AirPods. The fact that the keynote communicated the importance of sound through an understandable visualization of audio ray tracing showed that Apple considered every aspect of an immersive experience. What Apple learned from the AirPods will be extremely helpful in crafting an immersive experience. An immersive experience is only good enough if the audio overlay is as good as the visual overlay. Meta had a chance to dig into this with the Ray-Ban collaboration. But it chose to focus less on spatial audio and more on a privacy-eroding video recording capability. I am not sure that was really helpful for future development, apart from testing technological advances in a small form factor. In any case, customers do not seem very interested. I clearly see a missed opportunity here.

Edit / Update in October after Meta Connect 23: Meta has now addressed this with the second-generation Ray-Ban Meta smart glasses.

10. Making headsets wearable

Meta has not managed to solve the comfort problem as a whole, although it could have gathered plenty of customer feedback from the products it has shipped. In my opinion, this is the most important thing to address, and Meta has not handled it well enough. For head-worn devices, it seems very obvious to me that weight should be offloaded from your head as much as possible. Meta missed this and is about to release its third-generation Quest, still putting the weight at the front with a head strap that will probably make my head hurt yet again. Apple’s strap design may look like it was made by Woolworths, but it still appears very cozy. Contrary to most opinions, I am in favour of the external battery pack, and I doubt that it is a big constraint on usage. Being able to wear a headset for more than an hour without a headache is key to driving adoption, even if this route forces you to compromise on design and competitive pricing. It is too early to hand the award to Apple without trying the device myself, but the fact that the first iteration of the product already supports custom prescription lenses and individually fitted face cushions shows that Apple knows usability is the most crucial thing, and that it cares.

Apple Vision Pro – Apple’s first Mixed Reality device – Source: Apple

Now, did Meta manage to define and create the era of the next computing platform for communication? I’d say partly, yes, but it also missed many opportunities to put its stamp on it. In many ways, that gave Apple the chance to prepare and thoroughly think through almost every aspect of a spatial computing platform. For now, Meta remains dependent on Google, Microsoft and Qualcomm for such a platform’s core components: the operating system, development platform, cloud, apps, productivity tools, and the compute units (chips/silicon) and their software. This will make it difficult to close the gap in the speed of progress. But I get it: it is obviously hard to build and address all of those things when you start out as a software company. So what was left for Meta to do when the behemoth finally stood up to enter the game? It tried to pitch the “killer app” in the form of a grand vision: the Metaverse. But in the end, the Metaverse simply became one of the greatest marketing campaigns for distracting people and keeping their attention. It has not “zucked” many people in. Goliath just waited until the dust had settled and presented a very well thought-out concept.

An entire industry expected Apple’s market entry to create the “iPhone moment for XR”. Does this mean that nobody actually believed Meta could pull it off on its own? Is Apple now in a position to dominate an entire new industry?

Apple has built a firewall that is really hard to crack. It has achieved something no other company on this planet has managed: it controls an entire platform under the umbrella of a single brand. As a gatekeeper, it even controls the income streams of third-party apps and social media platforms. It has the ability to create the best products and user experiences, and we can assume that it will widen its user base even more. At the very least, the Apple Vision Pro announcement showed that Apple takes this platform seriously, and it marks a crowning moment for the XR industry as a whole. But it will probably take Google, Samsung, Microsoft, Qualcomm and Meta joining forces to counter Apple’s power. As for the “iPhone moment”, I think it will take Apple about four product iterations before mobile internet and communication undergo a substantial transformation toward the promised spatial use of the internet. But yes, this is the start of that moment. In the meantime, Meta has some time left to fix a few things and position itself. Until we see the outcome of this development, I’ll quote the XR community’s famous “5-10 years from now”.